
Yixin Song 宋奕欣

Bringing AGI to life with local GPU 💓

Ph.D., SJTU

yixinsong@sjtu.edu.cn / jeremysyx@gmail.com

I am a Ph.D. candidate at Shanghai Jiao Tong University (SJTU), focusing on Large Language Models (LLMs). I am a member of the Institute of Parallel and Distributed Systems (IPADS), where I am fortunate to be supervised by Prof. Zeyu Mi and Prof. Haibo Chen. My research focuses on algorithm and hardware co-design for efficient model serving and training systems.

CV / Google Scholar / HuggingFace / GitHub


News


Selected Publications

*Equal contribution.

PowerInfer: Fast Large Language Model Serving with a Consumer-grade GPU

Yixin Song, Zeyu Mi, Haotong Xie, Haibo Chen

SOSP, 2024

project page / arXiv / code

PowerInfer is a CPU/GPU LLM inference engine that leverages activation locality for fast inference on your device.

PowerInfer-2: Fast Large Language Model Inference on a Smartphone

Zhenliang Xue*, Yixin Song*, Zeyu Mi, Le Chen, Yubin Xia, Haibo Chen

arXiv, 2024

project page / arXiv

PowerInfer-2 is a highly optimized inference framework designed specifically for smartphones.

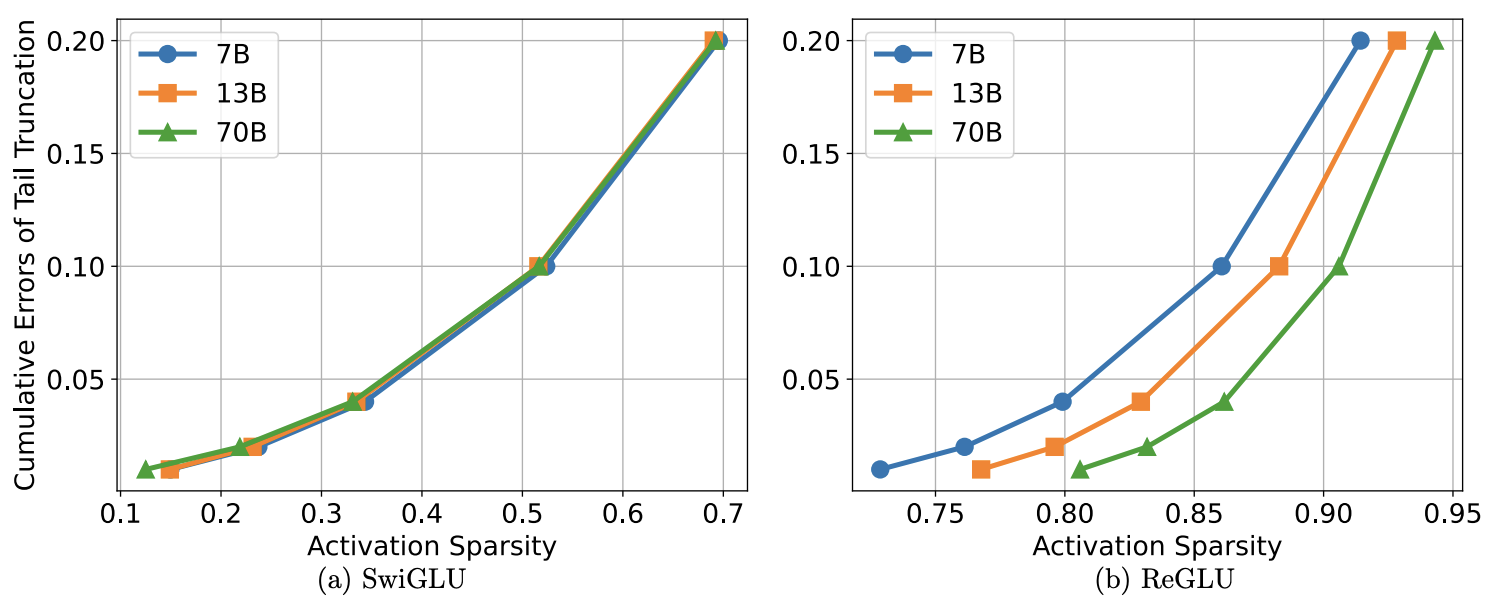

ReLU^2 Wins: Discovering Efficient Activation Functions for Sparse LLMs

Zhengyan Zhang*, Yixin Song*, Guanghui Yu, Xu Han, Yankai Lin, Chaojun Xiao, Chenyang Song, Zhiyuan Liu, Zeyu Mi, Maosong Sun

arXiv, 2024

arXiv

We introduce a general method that defines neuron activation through neuron output magnitudes and a tailored magnitude threshold, demonstrating that non-ReLU LLMs also exhibit sparse activation.

Bamboo: A New 7B Mistral-level Open LLM with High Sparsity

Yixin Song, Haotong Xie, Zeyu Mi, Li Ma, Haibo Chen

project, 2023

github

We introduce Bamboo-v0.1, a new 7B LLM that boasts high sparsity while delivering performance equivalent to Mistral-7B. This repo provides the details of the model.

Working Experience

Shanghai AI Laboratory

Researcher Intern, 2022.10 ~ Present

Miscellanea